2021.02.01

Introducing Human Guidance into Reinforcement Learning via Rule-based Representation

SOKENDAI Publication Grant for Research Papers program year: 2020

Informatics Nicolas Bougie

Informatics Course

Towards Interpretable Reinforcement Learning with State Abstraction Driven by External Knowledge

Journal: IEICE Transactions on Information and Systems; publication year: 2020

DOI: 10.1587/transinf.2019EDP7170

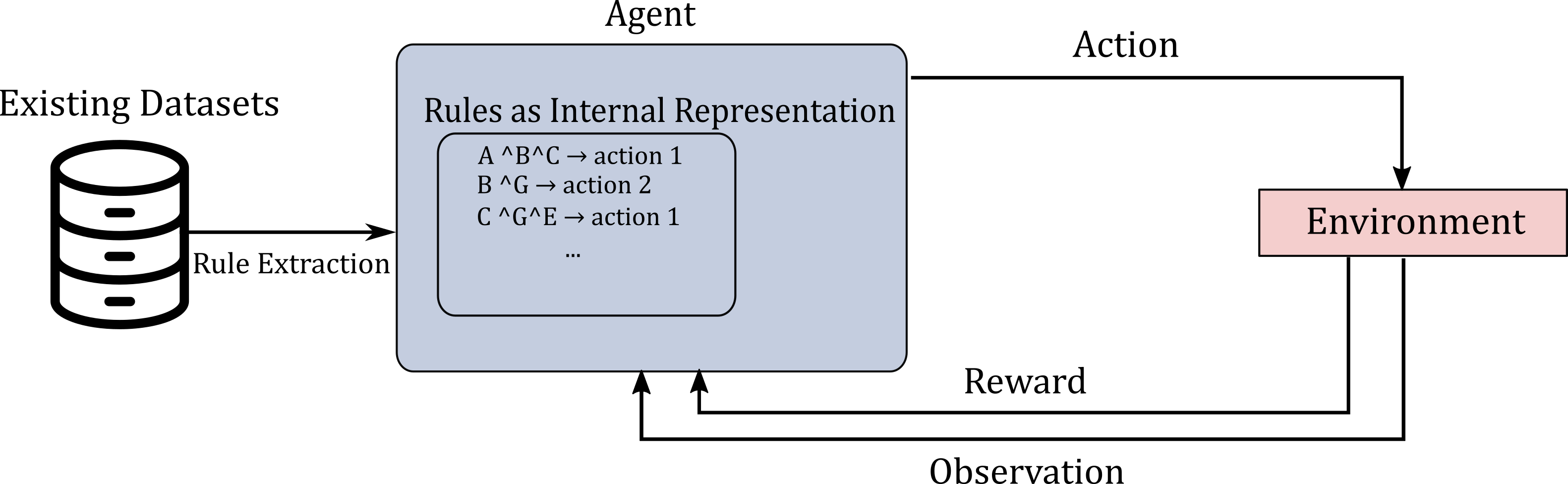

The agent's internal representation is based on rules automatically extracted from existing datasets. The rules are extracted offline and then used as the internal states of the agent. A rule (e.g. A ∧ B ∧ C) is a conjunction of variables (e.g. A, B, C) that represents a meaningful pattern in the environment. During training, the agent learns, through interaction with the environment, a policy that maps rules to actions.
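To make the representation in the caption concrete, here is a minimal Python sketch of a rule as a conjunction of boolean variables, and of how a raw observation could be mapped to an abstract, rule-based state. The observation encoding (the set of variables that currently hold), the `Rule` class, `abstract_state`, and the choice to prefer the most specific matching rule are all hypothetical assumptions for illustration; the caption does not say how overlapping rules are resolved.

```python
from dataclasses import dataclass
from typing import FrozenSet, Optional, Sequence


@dataclass(frozen=True)
class Rule:
    """A conjunction of boolean variables, e.g. A ∧ B ∧ C (hypothetical encoding)."""
    variables: FrozenSet[str]

    def matches(self, observation: FrozenSet[str]) -> bool:
        # The conjunction holds only if every one of its variables is true.
        return self.variables <= observation

    def __str__(self) -> str:
        return " ∧ ".join(sorted(self.variables))


def abstract_state(rules: Sequence[Rule], observation: FrozenSet[str]) -> Optional[int]:
    """Return the index of the matching rule with the most variables, or None.

    Assumption: overlapping matches are resolved by preferring the most
    specific rule; ties keep the earliest rule in the list.
    """
    best: Optional[int] = None
    for i, rule in enumerate(rules):
        if rule.matches(observation):
            if best is None or len(rule.variables) > len(rules[best].variables):
                best = i
    return best


rules = [Rule(frozenset({"A"})), Rule(frozenset({"A", "B", "C"})), Rule(frozenset({"D"}))]
print(abstract_state(rules, frozenset({"A", "B", "C", "E"})))  # → 1
print(abstract_state(rules, frozenset({"E"})))  # → None
```

The agent then only ever sees the index of the active rule, so the size of its state space is bounded by the number of extracted rules rather than by the raw observation space.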

Reinforcement learning (RL) provides an end-to-end formalism for learning policies through trial-and-error interaction with an environment: an agent learns a policy by interacting with that environment. Although learning from scratch is extremely convenient, it is often unrealistic to expect an RL system to succeed without any prior assumptions about the domain. In particular, learning from scratch often requires a huge number of interactions to reach reasonable performance, which can be impractical or infeasible in real-world settings. As a result, RL is not yet in widespread use in the real world.

To overcome these challenges, our method (rule-based Sarsa(λ)) leverages human guidance, which drastically reduces the learning workload and facilitates various forms of common-sense reasoning. We present a novel form of guidance based on existing sources of knowledge (e.g. datasets) that requires no additional human effort while improving the agent's sample efficiency. The core idea is to build an agent whose internal representation consists of rules automatically extracted from existing datasets related to the task being learned (cf. Figure). We propose several strategies for discovering these rules, and then build a reinforcement learning agent whose internal representation is based on them. We further show that the natural structure of the rules can be used to improve generalization and to extract a human-comprehensible chain of reasons for the agent's action choices (i.e. interpretability).
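The agent described above can be pictured as tabular Sarsa(λ) whose state is the index of the currently active rule. The sketch below is an illustration under stated assumptions, not the authors' implementation: the accumulating eligibility traces, ε-greedy exploration with random tie-breaking, all hyperparameter defaults, the `explain` readout, and the toy `ChainEnv` task (whose observations are assumed to already be rule indices) are hypothetical.

```python
import random
from collections import defaultdict
from typing import Dict, List, Tuple


class RuleSarsaLambda:
    """Tabular Sarsa(λ) whose states are indices of extracted rules.

    Sketch only: accumulating traces, ε-greedy exploration and the
    hyperparameter defaults are illustrative assumptions.
    """

    def __init__(self, n_actions: int, alpha: float = 0.1, gamma: float = 0.95,
                 lam: float = 0.9, epsilon: float = 0.1, seed: int = 0) -> None:
        self.n_actions = n_actions
        self.alpha, self.gamma, self.lam, self.epsilon = alpha, gamma, lam, epsilon
        self.q: Dict[Tuple[int, int], float] = defaultdict(float)  # Q(rule, action)
        self.rng = random.Random(seed)

    def act(self, state: int) -> int:
        # ε-greedy over the Q-values of the active rule, breaking ties at random.
        if self.rng.random() < self.epsilon:
            return self.rng.randrange(self.n_actions)
        values = [self.q[(state, a)] for a in range(self.n_actions)]
        best = max(values)
        return self.rng.choice([a for a, v in enumerate(values) if v == best])

    def run_episode(self, env, max_steps: int = 100) -> float:
        traces: Dict[Tuple[int, int], float] = defaultdict(float)
        state = env.reset()
        action = self.act(state)
        total = 0.0
        for _ in range(max_steps):
            next_state, reward, done = env.step(action)
            total += reward
            next_action = self.act(next_state)
            # TD error; terminal transitions do not bootstrap.
            target = reward if done else reward + self.gamma * self.q[(next_state, next_action)]
            delta = target - self.q[(state, action)]
            traces[(state, action)] += 1.0  # accumulating eligibility trace
            for key in list(traces):
                self.q[key] += self.alpha * delta * traces[key]
                traces[key] *= self.gamma * self.lam
            if done:
                break
            state, action = next_state, next_action
        return total

    def explain(self, rule_names: List[str], state: int) -> str:
        # Report the greedy choice in terms of the rule that defines the state.
        values = [self.q[(state, a)] for a in range(self.n_actions)]
        best = values.index(max(values))
        return f"rule {rule_names[state]} holds -> action {best} (Q={values[best]:.2f})"


class ChainEnv:
    """Hypothetical toy task: observations are already rule indices 0..n-1."""

    def __init__(self, n: int = 5) -> None:
        self.n, self.pos = n, 0

    def reset(self) -> int:
        self.pos = 0
        return self.pos

    def step(self, action: int) -> Tuple[int, float, bool]:
        # Action 1 moves right, action 0 moves left; the last rule is the goal.
        self.pos = self.pos + 1 if action == 1 else max(0, self.pos - 1)
        done = self.pos == self.n - 1
        return self.pos, (1.0 if done else 0.0), done


env = ChainEnv()
agent = RuleSarsaLambda(n_actions=2)
for _ in range(500):
    agent.run_episode(env)
names = ["R0", "R1", "R2", "R3", "GOAL"]
print(agent.explain(names, 3))
```

Because each state is a human-readable rule, a learned action preference can be reported in terms of the rule that triggered it, which is the intuition behind the interpretability claim; the paper's own explanation mechanism may differ.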

We evaluate our method on several tasks, including trading and visual navigation. We found that this form of guidance is crucial for learning both more efficiently and more effectively. In the future, we hope to apply our method to real-world problems.

Bibliographic information of awarded paper

- Title: Towards Interpretable Reinforcement Learning with State Abstraction Driven by External Knowledge

- Authors: Nicolas Bougie & Ryutaro Ichise

- Journal Title: IEICE Transactions on Information and Systems

- Publication Year: 2020

- DOI: https://doi.org/10.1587/transinf.2019EDP7170

Department: Department of Informatics (Nicolas Bougie)