January 05, 2022

Effects of Image Processing Operations on Adversarial Noise and Their Use in Detecting and Correcting Adversarial Images

program year: 2021

Informatics NGUYEN Hong Huy


journal: The IEICE Transactions on Information and Systems

publish year: 2022

DOI: 10.1587/transinf.2021MUP0005

https://search.ieice.org/bin/summary.php?id=e105-d_1_65

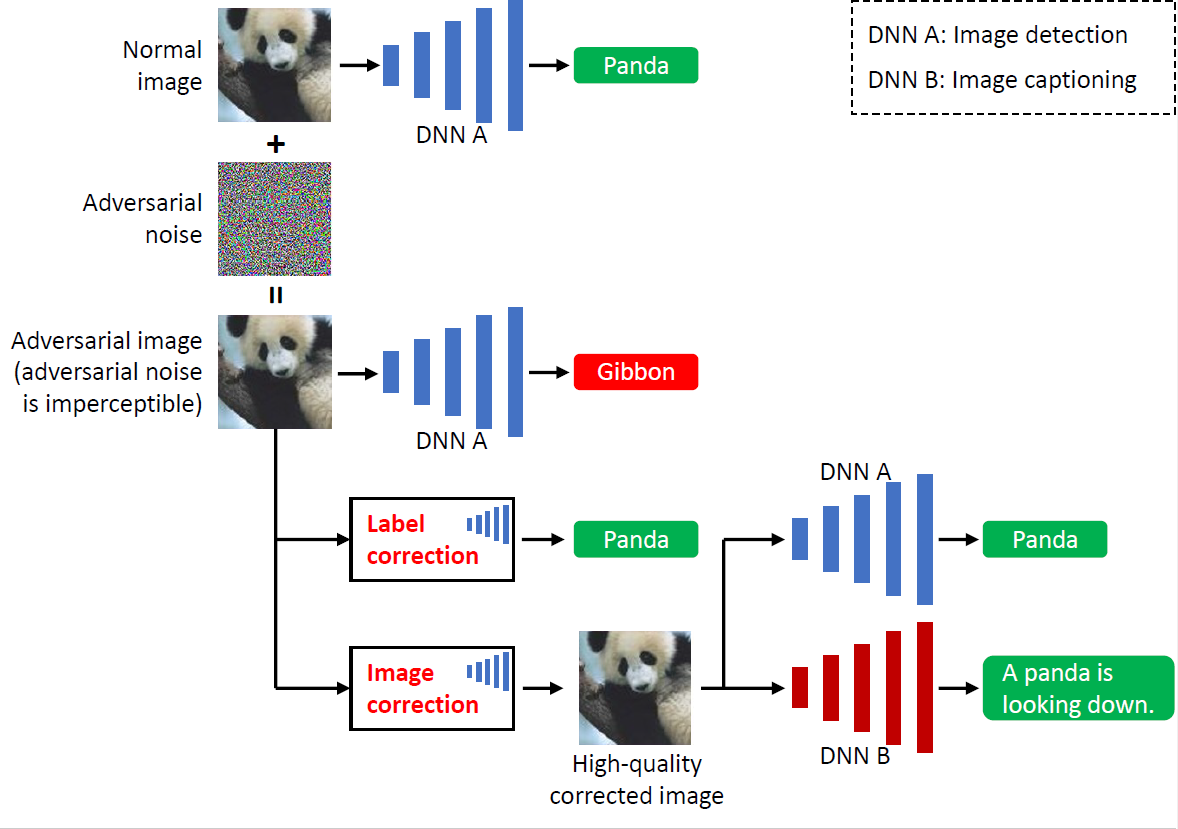

Adversarial noise was added to the panda image so that DNN A misclassified it as a gibbon. Label correction restored the correct label predicted by DNN A. Image correction mitigated the adversarial noise so that both the targeted DNN (DNN A) and another DNN (DNN B) worked correctly on the corrected image.

Thanks to their high performance, deep neural networks (DNNs), a class of artificial neural networks, have been widely applied in daily life, industry, and academia. A simple example is the model a smartphone uses to recognize faces. Image processing and computer vision are two domains that currently rely heavily on DNNs. However, DNNs are vulnerable to adversarial attacks, in which tiny noise or patterns that are imperceptible to humans are added to a network's inputs (forming adversarial examples) to alter its outputs. Such attacks are especially troublesome in mission-critical applications such as autonomous vehicles and medical imaging. Moreover, adversarial examples can be used to poison training data and thereby corrupt DNN models.
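To make the idea of an imperceptible perturbation concrete, here is a minimal sketch of the fast gradient sign method (FGSM), one classical attack, applied to a toy linear classifier rather than a DNN. The 100-feature model, its weights, the bias, the clean input, and epsilon are all hypothetical values chosen for illustration.

```python
# Minimal FGSM sketch on a hypothetical toy linear classifier (not a DNN).

def sign(v):
    """Return -1, 0, or 1 depending on the sign of v."""
    return (v > 0) - (v < 0)

def score(w, b, x):
    """Linear decision score w.x + b."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b

def predict(w, b, x):
    """Binary label: 1 if the score is positive, else 0."""
    return 1 if score(w, b, x) > 0 else 0

def fgsm_linear(w, x, y, epsilon):
    """One FGSM step against a logistic-loss linear model.

    For label y = 1 the gradient of the loss w.r.t. x points along -w,
    and for y = 0 it points along +w, so only sign(w) is needed.
    Feature values are clipped to [0, 1] like pixel intensities.
    """
    direction = -1 if y == 1 else 1
    return [min(1.0, max(0.0, xi + epsilon * direction * sign(wi)))
            for wi, xi in zip(w, x)]

n = 100
w = [0.1 if i % 2 == 0 else -0.1 for i in range(n)]  # hypothetical weights
b = 0.5                                               # hypothetical bias
x = [0.5] * n                                         # hypothetical clean input
y = predict(w, b, x)
x_adv = fgsm_linear(w, x, y, epsilon=0.06)
print(y, predict(w, b, x_adv))                    # 1 0
print(max(abs(a - c) for a, c in zip(x_adv, x)))  # about 0.06 (floating point)
```

Each feature moves by only 0.06, yet the score drops by epsilon times the L1 norm of w (0.06 × 10 = 0.6), from 0.5 to about -0.1, which flips the label. In high-dimensional inputs such as images, many tiny per-pixel changes add up in the same way.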

In this work, we proposed methods for detecting and correcting adversarial examples by using multiple image processing operations with multiple parameter values. We are the first to formally define two levels of correction. The first level, label correction, restores the labels that the targeted DNN originally predicted for the images before they were attacked; in this way, it restores the DNN's functionality. The second level, image correction, restores the original images themselves: the adversarial noise is mitigated while the quality of the corrected images is preserved as much as possible, so the corrected images behave approximately the same as the original (clean) images. In terms of performance, our correction method restores nearly 90% of the adversarial images created by classical adversarial attacks while only slightly affecting normal images in the case of blind correction (correction applied without first detecting whether an image is adversarial).
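Below is a minimal sketch of how several processing operations, each run with several parameter values, could drive detection and the two levels of correction. It is not the authors' implementation: the operation bank (a 1-D box blur and value quantization standing in for image filters), the disagreement threshold, majority voting as the label-correction rule, and the smallest-change rule for image correction are all hypothetical choices. The toy classifier and the alternating perturbation are hand-built so that smoothing cancels the noise; real DNNs and attacks behave far less neatly.

```python
from collections import Counter

def box_blur(x, radius):
    """1-D mean filter; the window shrinks at the borders."""
    n = len(x)
    out = []
    for i in range(n):
        lo, hi = max(0, i - radius), min(n, i + radius + 1)
        out.append(sum(x[lo:hi]) / (hi - lo))
    return out

def quantize(x, levels):
    """Map each value in [0, 1] to the nearest of `levels` evenly spaced values."""
    step = levels - 1
    return [round(v * step) / step for v in x]

# Hypothetical bank: each operation paired with several parameter values.
OPERATIONS = [(box_blur, r) for r in (1, 2)] + [(quantize, q) for q in (3, 5, 17)]

def detect_and_correct(classify, x, threshold=0.5):
    """Return (is_adversarial, corrected_label).

    Detection: flag x if more than `threshold` of the processed variants
    receive a different label than x itself (hypothetical rule).
    Label correction: majority vote over the variants' labels, returned for
    every input, as in blind correction (hypothetical rule).
    """
    original = classify(x)
    labels = [classify(op(x, p)) for op, p in OPERATIONS]
    disagreement = sum(label != original for label in labels) / len(labels)
    corrected = Counter(labels).most_common(1)[0][0]
    return disagreement > threshold, corrected

def correct_image(classify, x):
    """Hypothetical image-correction rule: among variants that receive the
    voted label, keep the one with the smallest mean squared change from x."""
    _, label = detect_and_correct(classify, x)
    variants = [op(x, p) for op, p in OPERATIONS]
    agreeing = [v for v in variants if classify(v) == label]
    return min(agreeing, key=lambda v: sum((a - b) ** 2 for a, b in zip(v, x)) / len(x))

# Hypothetical toy classifier and inputs.
W = [0.1 if i % 2 == 0 else -0.1 for i in range(100)]

def classify(x):
    score = sum(w * v for w, v in zip(W, x)) + 0.5
    return 1 if score > 0 else 0

clean = [0.5] * 100
adversarial = [0.44 if i % 2 == 0 else 0.56 for i in range(100)]
print(detect_and_correct(classify, clean))        # (False, 1)
print(detect_and_correct(classify, adversarial))  # (True, 1)
```

On the adversarial input, four of the five variants disagree with its label, so it is flagged and the vote restores label 1; on the clean input, every variant agrees, so blind correction leaves its label unchanged.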

Because the proposed correction methods apply multiple image processing operations with multiple parameter values, they are slow and computationally expensive. Our future work will address this problem and improve the algorithm so that the correction methods can also handle recent, more sophisticated adversarial attacks.
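A rough cost model makes the slowdown concrete. Assuming one classifier forward pass on the unprocessed input plus one per processed variant (and ignoring the cost of the operations themselves), the work per image grows with the total number of parameter values, compared with a single pass for an undefended classifier. The operation names and parameter grid below are hypothetical.

```python
def forward_passes_per_image(param_grid):
    """Classifier calls needed for one image: one on the unprocessed input
    plus one per (operation, parameter value) pair."""
    return 1 + sum(len(values) for values in param_grid.values())

# Hypothetical grid: operation names and parameter values are illustrative only.
grid = {
    "box_blur_radius": [1, 2, 3],
    "quantize_levels": [3, 5, 9, 17],
    "gaussian_noise_std": [0.01, 0.02],
}

per_image = forward_passes_per_image(grid)
print(per_image)           # 10
print(per_image * 10_000)  # 100000 passes for 10,000 images
```

Under this model, each extra parameter value adds one forward pass per image, so the size of the operation/parameter grid directly sets the cost.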

Bibliographic information of the awarded paper

- Title: Effects of Image Processing Operations on Adversarial Noise and Their Use in Detecting and Correcting Adversarial Images

- Authors: Huy H. Nguyen, Minoru Kuribayashi, Junichi Yamagishi, and Isao Echizen

- Journal Title: The IEICE Transactions on Information and Systems

- Publication Year: 2022
